I want to convert coordinates of traffic lights into camera coordinates system but I was not able to do that. I used similar code to the function used to convert from point could to the camera but when I draw the points on the image, I found that they are not accurate. Could you please help me to figure out what is wrong with my code?

Here is my code:

import os

import json

import random

from typing import Dict, List, Tuple, Optional, Union

import descartes

from tqdm import tqdm

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.gridspec as gridspec

from matplotlib.patches import Rectangle, Arrow

from matplotlib.axes import Axes

from matplotlib.figure import Figure

from mpl_toolkits.axes_grid1.inset_locator import mark_inset

from PIL import Image

from shapely.geometry import Polygon, MultiPolygon, LineString, Point, box

from shapely import affinity

import cv2

from pyquaternion import Quaternion

from nuscenes.nuscenes import NuScenes

from nuscenes.utils.geometry_utils import view_points

from nuscenes.nuscenes import NuScenes

nusc = NuScenes(version=‘v1.0-mini’, verbose=False,dataroot=’/s/red/a/nobackup/vision/nuScenes/data/sets/nuscenes’)

camera_channel = ‘CAM_FRONT’

patch_radius=50

sample_record = nusc.get(‘sample’, sample_token)

scene_record = nusc.get(‘scene’, sample_record[‘scene_token’])

log_record = nusc.get(‘log’, scene_record[‘log_token’])

log_location = log_record[‘location’]

nusc_map = NuScenesMap(dataroot=’/s/red/a/nobackup/vision/nuScenes/data/sets/nuscenes’, map_name=log_location)

cam_token = sample_record[‘data’][camera_channel]

cam_record = nusc.get(‘sample_data’, cam_token)

cam_path = nusc.get_sample_data_path(cam_token)

im = Image.open(cam_path)

im_size = im.size

cs_record = nusc.get(‘calibrated_sensor’, cam_record[‘calibrated_sensor_token’])

cam_intrinsic = np.array(cs_record[‘camera_intrinsic’])

layer_names = [‘traffic_light’]

cam_token = sample_record[‘data’][camera_channel]

cam_record = nusc.get(‘sample_data’, cam_token)

cam_path = nusc.get_sample_data_path(cam_token)

im = Image.open(cam_path)

im_size = im.size

cs_record = nusc.get(‘calibrated_sensor’, cam_record[‘calibrated_sensor_token’])

cam_intrinsic = np.array(cs_record[‘camera_intrinsic’])

Retrieve the current map.

poserecord = nusc.get(‘ego_pose’, cam_record[‘ego_pose_token’])

ego_pose = poserecord[‘translation’]

box_coords = (

ego_pose[0] - patch_radius,

ego_pose[1] - patch_radius,

ego_pose[0] + patch_radius,

ego_pose[1] + patch_radius,

)

records_in_patch = nusc_map.get_records_in_patch(box_coords, layer_names, ‘intersect’)

Init axes.

fig = plt.figure(figsize=(9, 16))

ax = fig.add_axes([0, 0, 1, 1])

ax.set_xlim(0, im_size[0])

ax.set_ylim(0, im_size[1])

ax.imshow(im)

near_plane = 1e-8

Retrieve and render each record.

for layer_name in layer_names:

for token in records_in_patch[layer_name]:

record = nusc_map.get(layer_name, token)

line = nusc_map.extract_line(record['line_token'])

if line.is_empty: # Skip lines without nodes

continue

xs, ys = line.xy

points=np.array([[record['pose']['tx']],[record['pose']['ty']],[record['pose']['tz']]])

# Transform into the ego vehicle frame for the timestamp of the image.

points = points - np.array(poserecord['translation']).reshape((-1, 1))

points = np.dot(Quaternion(poserecord['rotation']).rotation_matrix.T, points)

# Transform into the camera.

points = points - np.array(cs_record['translation']).reshape((-1, 1))

points = np.dot(Quaternion(cs_record['rotation']).rotation_matrix.T, points)

# Remove points that are partially behind the camera.

depths = points[2, :]

behind = depths < near_plane

if np.all(behind):

continue

# Grab the depths before performing the projection (z axis points away from the camera).

depths1 = points[2, :]

# Take the actual picture (matrix multiplication with camera-matrix + renormalization).

points = view_points(points[:3,:], cam_intrinsic, normalize=True)

depths = points[2, :]

print(points)

# Skip polygons where all points are outside the image.

# Leave a margin of 1 pixel for aesthetic reasons.

inside = np.ones(depths.shape[0], dtype=bool)

inside = np.logical_and(inside, points[0, :] > 1)

inside = np.logical_and(inside, points[0, :] < im.size[0] - 1)

inside = np.logical_and(inside, points[1, :] > 1)

inside = np.logical_and(inside, points[1, :] < im.size[1] - 1)

if np.any(np.logical_not(inside)):

continue

points = points[:2, :]

plt.scatter(points[0], points[1], c=nusc_map.explorer.color_map['walkway'], s=70)

Display the image.

plt.axis(‘off’)

ax.invert_yaxis()

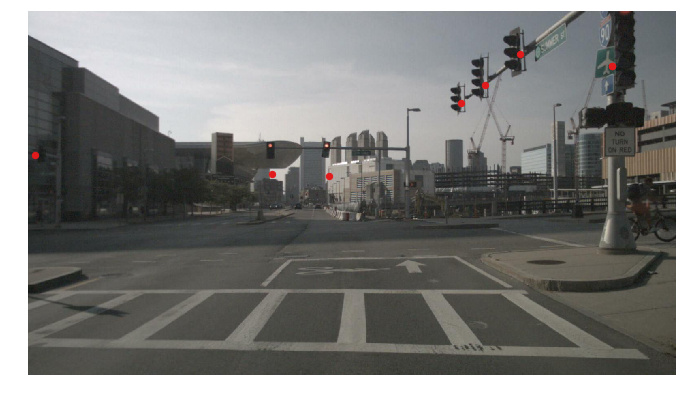

Here are some example of the plots: