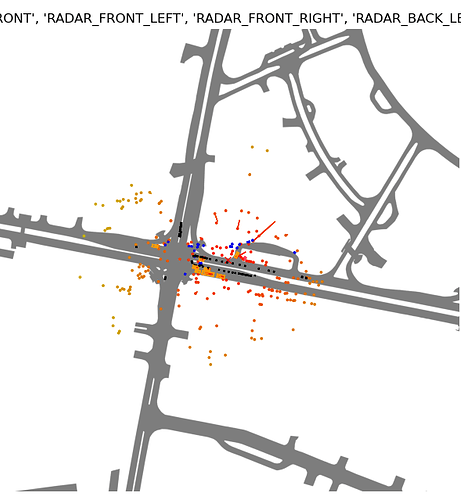

I sort of fixed it by making a function that does all the transformations on point clouds and the map that normally get done in render_sample_data, RadarPointCloud.from_file_multisweep and render_egocentric_map. It returns a correct plot (shown below), but it’s far from an elegant solution.

def transform_pc_and_map(self, sample_token: str, axes_limit: int = 150, base_directory: str = '',

use_contour: bool = True, do_plot: bool = False) -> tuple:

def crop_image(image: np.array,

x_px: int,

y_px: int,

axes_limit_px: int) -> np.array:

x_min = int(x_px - axes_limit_px)

x_max = int(x_px + axes_limit_px)

y_min = int(y_px - axes_limit_px)

y_max = int(y_px + axes_limit_px)

cropped_image = image[y_min:y_max, x_min:x_max]

return cropped_image

# nuscenes stuff

scene_token, log_token = self.__tokens_from_sample(sample_token)

current_sample_record = self.nusc.get('sample', sample_token)

ref_channel = 'LIDAR_TOP'

ref_sd_token = current_sample_record['data'][ref_channel]

ref_sd_record = self.nusc.get('sample_data', ref_sd_token)

ref_pose_record = self.nusc.get('ego_pose', ref_sd_record['ego_pose_token'])

ref_cs_record = self.nusc.get('calibrated_sensor', ref_sd_record['calibrated_sensor_token'])

radar_modalities = ('RADAR_FRONT', 'RADAR_FRONT_LEFT', 'RADAR_FRONT_RIGHT', 'RADAR_BACK_LEFT',

'RADAR_BACK_RIGHT')

# get map information

basic_mask: MapMask = self.nusc.get('map', self.nusc.get('log', log_token)['map_token'])['mask']

if use_contour:

mask_raster = self.__contour_mask_from_sample(sample_token, base_directory=base_directory)

else:

mask_raster = basic_mask.mask()

# get radar point cloud

points_total = np.zeros((4, 0))

for mod in radar_modalities:

# get data

current_sd_token = current_sample_record['data'][mod]

current_sd_record = self.nusc.get('sample_data', current_sd_token)

current_pose_record = self.nusc.get('ego_pose', current_sd_record['ego_pose_token'])

current_cs_record = self.nusc.get('calibrated_sensor', current_sd_record['calibrated_sensor_token'])

pc = RadarPointCloud.from_file(osp.join(self.nusc.dataroot, current_sd_record['filename']))

points = np.vstack((pc.points[:3, :], np.ones(pc.points.shape[1])))

# Transformations done in from_file_multisweep

# Homogeneous transform from ego car frame to reference frame.

ref_from_car = transform_matrix(ref_cs_record['translation'], Quaternion(ref_cs_record['rotation']),

inverse=True)

# Homogeneous transformation matrix from global to _current_ ego car frame.

car_from_global = transform_matrix(ref_pose_record['translation'], Quaternion(ref_pose_record['rotation']),

inverse=True)

global_from_car = transform_matrix(current_pose_record['translation'],

Quaternion(current_pose_record['rotation']), inverse=False)

# Homogeneous transformation matrix from sensor coordinate frame to ego car frame.

car_from_current = transform_matrix(current_cs_record['translation'], Quaternion(current_cs_record['rotation']),

inverse=False)

# Fuse four transformation matrices into one and perform transform.

trans_matrix = reduce(np.dot, [ref_from_car, car_from_global, global_from_car, car_from_current])

points = trans_matrix.dot(points)

points_total = np.hstack((points_total, points))

# Transformations done in render_sample_data

# Compute rotation between 3D vehicle pose and "flat" vehicle pose (parallel to global z plane).

ref_to_ego = transform_matrix(translation=ref_cs_record['translation'],

rotation=Quaternion(ref_cs_record["rotation"]))

ego_yaw = Quaternion(ref_pose_record['rotation']).yaw_pitch_roll[0]

rotation_vehicle_flat_from_vehicle = np.dot(

Quaternion(scalar=np.cos(ego_yaw / 2), vector=[0, 0, np.sin(ego_yaw / 2)]).rotation_matrix,

Quaternion(ref_pose_record['rotation']).inverse.rotation_matrix)

vehicle_flat_from_vehicle = np.eye(4)

vehicle_flat_from_vehicle[:3, :3] = rotation_vehicle_flat_from_vehicle

viewpoint = np.dot(vehicle_flat_from_vehicle, ref_to_ego)

points_total = viewpoint.dot(points_total)

# Transformations done in render_egocentric_map

# Retrieve and crop mask.

pixel_coords = basic_mask.to_pixel_coords(ref_pose_record['translation'][0],

ref_pose_record['translation'][1])

scaled_limit_px = int(axes_limit * (1.0 / basic_mask.resolution))

# noinspection PyTypeChecker

cropped = crop_image(mask_raster, pixel_coords[0], pixel_coords[1], int(scaled_limit_px * np.sqrt(2)))

# Rotate image.

ypr_rad = Quaternion(ref_pose_record['rotation']).yaw_pitch_roll

yaw_deg = -np.degrees(ypr_rad[0])

rotated_cropped = np.array(Image.fromarray(cropped).rotate(yaw_deg))

# Crop image.

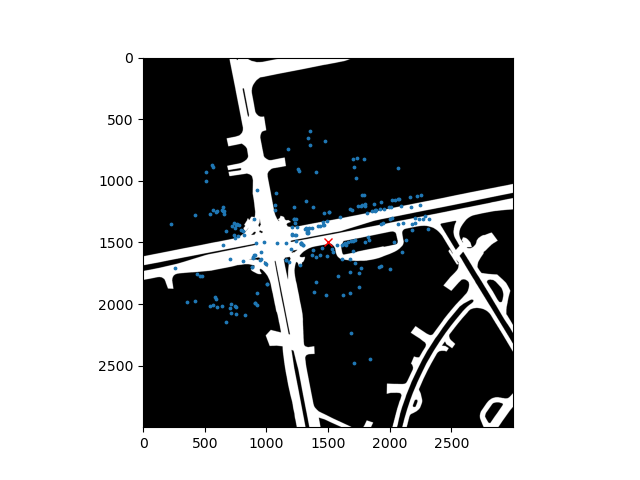

ego_centric_map = crop_image(rotated_cropped, rotated_cropped.shape[1] / 2,

rotated_cropped.shape[0] / 2,

scaled_limit_px)

# Transformation done to compensate for not using the matplotlib.imshow extent paramater

points_total += np.array((axes_limit, axes_limit, 0, 0)).reshape((4, 1))

points_total /= basic_mask.resolution

ego_centric_map = np.flipud(ego_centric_map)

# Plotting

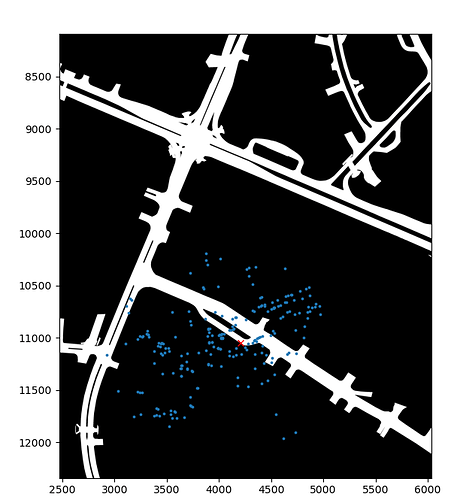

if do_plot:

plt.figure()

plt.imshow(ego_centric_map, cmap='gray', vmin=0, vmax=255)

plt.scatter(points_total[0, :], points_total[1, :], s=3)

plt.plot(axes_limit / basic_mask.resolution, axes_limit / basic_mask.resolution, 'x', color='red')

return points_total[:3, :], ego_centric_map