Hi,

Is there a way to transform the coordinates from the ego_pose information into the global coordinate frame? Thank you very much for the answer.

Transforming the ego_pose coordinates into global coordinate frame

Yes, that’s what the ego_pose table is there for.

# Get reference pose and timestamp.

sample_rec = nusc.sample[0]

ref_chan = 'LIDAR_TOP'

ref_sd_token = sample_rec['data'][ref_chan]

ref_sd_rec = nusc.get('sample_data', ref_sd_token)

ref_pose_rec = nusc.get('ego_pose', ref_sd_rec['ego_pose_token'])

# Homogeneous transformation matrix from global to _current_ ego car frame.

car_from_global = transform_matrix(ref_pose_rec['translation'], Quaternion(ref_pose_rec['rotation']),

inverse=True)Hi,

the ego_pose information is already given into the global coordinate frame, isn’t it?

See tutorial 11. ego_pose

ego_pose contains information about the location (encoded in translation) and the orientation (encoded in rotation) of the ego vehicle body frame, with respect to the global coordinate system.

Tank you for your answer

Hi. That sentence may sound a bit confusing.

What it really means is

ego_pose contains information about the location (encoded in translation) and the orientation (encoded in rotation) of the ego vehicle, with respect to the global coordinate system.

So the answer is yes.

Hi,

thanks for your answer.

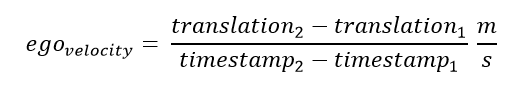

So I want to know, how fast the ego-car ist moving at timestamp t1:

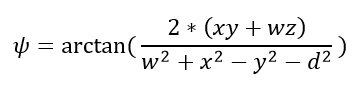

Then I need the yaw-rate (angle °) of the ego-car, I am calculating it like:

Is it right or do you have another way to calculate the ego_velocities from the ego_pose?

I am asking because I want to compare the ego_velocity to the radar-measurements

Best regards

Why not simply use your ego_velocity formula?

Also note that the radar provides both compensated and uncompensated velocities (w.r.t. ego motion).