hi,

Is there a way to only render specific bouning box categories with “with_anns” in render_sample_data ?

With_anns for rendering

@Naveed here’s how I would approach it:

import matplotlib.pyplot as plt

import numpy as np

from PIL import Image

from nuscenes.nuscenes import NuScenes

categories_to_render = [

'movable_object.barrier',

'vehicle.car'

]

sample_data_token = 'e3d495d4ac534d54b321f50006683844'

# Load nuScenes.

nusc = NuScenes(version='v1.0-mini', dataroot='/data/sets/nuscenes', verbose=False)

# Load boxes and image.

data_path, boxes, camera_intrinsic = nusc.get_sample_data(sample_data_token) # , selected_anntokens=[])

data = Image.open(data_path)

# Init axes.

_, ax = plt.subplots(1, 1, figsize=(9, 16))

# Show image.

ax.imshow(data)

# Show boxes.

for box in boxes:

if box.name in categories_to_render:

c = np.array(nusc.explorer.get_color(box.name)) / 255.0

box.render(ax, view=camera_intrinsic, normalize=True, colors=(c, c, c))

# Limit visible range.

ax.set_xlim(0, data.size[0])

ax.set_ylim(data.size[1], 0)

ax.axis('off')

ax.set_aspect('equal')

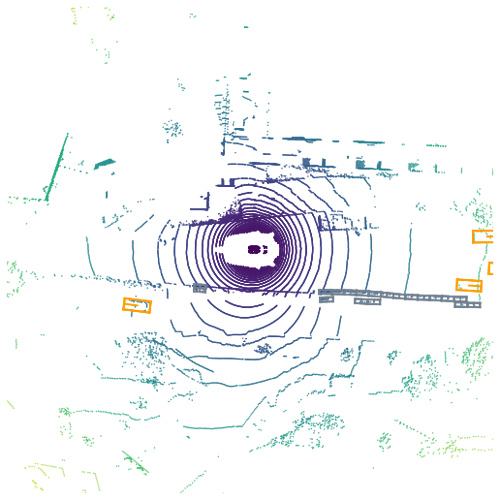

The above would render the following:

Thank you so much for this. In order to get the top lidar view I would have to change the view=camera_intrinsic to a lidar_top view matrix ?

Rendering the top lidar view is slightly more involved, but here’s one way to do it:

import os

import matplotlib.pyplot as plt

import numpy as np

from PIL import Image

from pyquaternion import Quaternion

from nuscenes.nuscenes import NuScenes

from nuscenes.utils.data_classes import LidarPointCloud

from nuscenes.utils.geometry_utils import view_points, transform_matrix

categories_to_render = [

'movable_object.barrier',

'vehicle.car'

]

sample_data_token = '9d9bf11fb0e144c8b446d54a8a00184f'

# Load nuScenes.

nusc = NuScenes(version='v1.0-mini', dataroot='/data/sets/nuscenes', verbose=False)

sample_data_record = nusc.get('sample_data', sample_data_token)

# Load boxes.

_, boxes, _ = nusc.get_sample_data(sample_data_token, use_flat_vehicle_coordinates=True)

# Load a single lidar point cloud.

pcl_path = os.path.join(nusc.dataroot, sample_data_record['filename'])

pc = LidarPointCloud.from_file(pcl_path)

# Init axes.

_, ax = plt.subplots(1, 1, figsize=(9, 16))

# Retrieve transformation matrices for reference point cloud.

cs_record = nusc.get('calibrated_sensor', sample_data_record['calibrated_sensor_token'])

pose_record = nusc.get('ego_pose', sample_data_record['ego_pose_token'])

ref_to_ego = transform_matrix(translation=cs_record['translation'],

rotation=Quaternion(cs_record["rotation"]))

# Compute rotation between 3D vehicle pose and "flat" vehicle pose (parallel to global z plane).

ego_yaw = Quaternion(pose_record['rotation']).yaw_pitch_roll[0]

rotation_vehicle_flat_from_vehicle = np.dot(

Quaternion(scalar=np.cos(ego_yaw / 2), vector=[0, 0, np.sin(ego_yaw / 2)]).rotation_matrix,

Quaternion(pose_record['rotation']).inverse.rotation_matrix

)

vehicle_flat_from_vehicle = np.eye(4)

vehicle_flat_from_vehicle[:3, :3] = rotation_vehicle_flat_from_vehicle

viewpoint = np.dot(vehicle_flat_from_vehicle, ref_to_ego)

# Show point cloud.

axes_limit = 40

points = view_points(pc.points[:3, :], viewpoint, normalize=False)

dists = np.sqrt(np.sum(pc.points[:2, :] ** 2, axis=0))

colors = np.minimum(1, dists / axes_limit / np.sqrt(2))

point_scale = 0.2

scatter = ax.scatter(points[0, :], points[1, :], c=colors, s=point_scale)

# Show boxes.

for box in boxes:

if box.name in categories_to_render:

c = np.array(nusc.explorer.get_color(box.name)) / 255.0

box.render(ax, view=np.eye(4), colors=(c, c, c))

# Limit visible range.

ax.set_xlim(-axes_limit, axes_limit)

ax.set_ylim(-axes_limit, axes_limit)

ax.axis('off')

ax.set_aspect('equal')

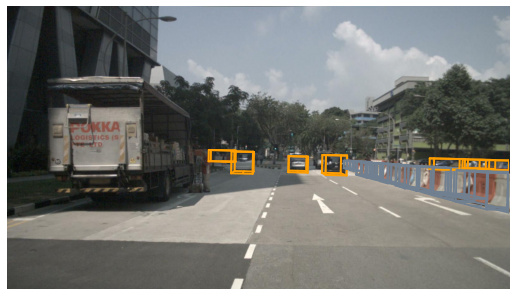

The above would render the following: