Hi, is it possible to create a disparity map from the front cameras?

Disparity map generation

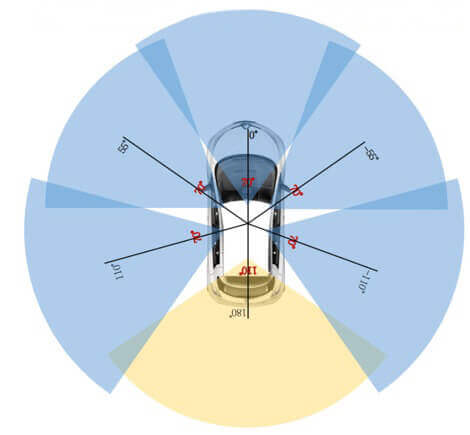

Hi. Strictly speaking, we don’t have stereo cameras, hence no disparity maps. If you look at this plot, you will also see that there is very little overlap in FOV between the cameras.

But maybe you can create a fake disparity map by synthesizing a new camera given the existing camera and lidar information? Or you can compute fake stereo between successive frames of the same camera? But then again, why do you need stereo if you have lidar?
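If you go the synthesized-camera route, lidar depth converts to disparity for a virtual rectified stereo pair through the usual relation disparity = focal length × baseline / depth. Below is a minimal sketch, assuming a depth map in metres and a focal length in pixels. The baseline is a made-up parameter for the imaginary second camera, not anything provided by nuScenes, and `depth_to_disparity` is a hypothetical helper:

```python
import numpy as np


def depth_to_disparity(depth, focal_px, baseline_m):
    """Convert a depth map (metres) into a disparity map (pixels) for a
    hypothetical rectified stereo pair: disparity = focal * baseline / depth.

    Pixels without a valid depth (<= 0) get disparity 0.
    """
    depth = np.asarray(depth, dtype=np.float64)
    disparity = np.zeros_like(depth)
    valid = depth > 0  # 0 is used here to mark "no lidar return"
    disparity[valid] = focal_px * baseline_m / depth[valid]
    return disparity
```

For example, with a hypothetical 1000 px focal length and a 0.5 m baseline, a point at 10 m maps to a disparity of 50 px, and a point at 20 m maps to 25 px.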

That’s unfortunate! My project consists of detecting pedestrians with a stereo camera and radar, and I was excited to find a dataset with radar.

That said, the solutions you suggested are worth a try. Can you suggest any paper on creating a fake disparity map from camera and lidar?

Thank you for your support!

Hi, I do not know of any paper that does this. But I’ve seen works that synthesize images from new viewpoints, and I’ve seen other works that do stereo from monocular image sequences. But my idea may be too involved.

Why don’t you start with an off-the-shelf monocular depth estimator rather than stereo? These days they are getting really good.

I know of an algorithm named monodepth2, which seems quite good and uses only monocular input, as @holger-motional suggested.

@javierlorenzod thank you for your suggestion !!

@holger-motional I think I’m going to take this direction. As a first step, I can compare the algorithm proposed by @javierlorenzod with a stereo depth estimator on object detection and see if I get approximately the same results… Or are you aware of any paper that has done this kind of work?
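Besides comparing detection results, it may help to compare the depth maps themselves against lidar ground truth. Below is a minimal sketch of the error metrics commonly reported in KITTI-style depth evaluation: absolute relative error, squared relative error, RMSE, log RMSE, and the δ < 1.25^k accuracies. It assumes `gt` and `pred` are depth arrays in metres of the same shape, that 0 in `gt` marks missing ground truth, and that predictions are strictly positive. `depth_errors` is a hypothetical helper name:

```python
import numpy as np


def depth_errors(gt, pred):
    """Standard depth-evaluation metrics, computed only where gt > 0.

    Assumes pred > 0 everywhere (clip predictions beforehand if needed).
    """
    gt = np.asarray(gt, dtype=np.float64)
    pred = np.asarray(pred, dtype=np.float64)
    mask = gt > 0  # ignore pixels without lidar ground truth
    gt, pred = gt[mask], pred[mask]

    # Ratio used for the delta-threshold accuracies.
    thresh = np.maximum(gt / pred, pred / gt)
    return {
        "abs_rel": float(np.mean(np.abs(gt - pred) / gt)),
        "sq_rel": float(np.mean((gt - pred) ** 2 / gt)),
        "rmse": float(np.sqrt(np.mean((gt - pred) ** 2))),
        "rmse_log": float(np.sqrt(np.mean((np.log(gt) - np.log(pred)) ** 2))),
        "a1": float(np.mean(thresh < 1.25)),
        "a2": float(np.mean(thresh < 1.25 ** 2)),
        "a3": float(np.mean(thresh < 1.25 ** 3)),
    }
```

Running the same function on the monocular and the stereo predictions, with the same lidar-derived ground truth, gives a like-for-like comparison before any detector is involved.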

I am trying to concatenate multiple lidar scans and project them onto the image plane to get a dense point cloud. After that, I tried inpainting the dense point cloud using the NYU depth colorization toolbox to get a depth map, similar to the methods used for KITTI: https://github.com/mrharicot/monodepth/blob/master/utils/evaluation_utils.py#L169
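The projection step itself can be sketched with plain NumPy. This assumes the points are already expressed in the camera frame as a 3xN array and that `K` is the 3x3 camera intrinsic matrix. `points_to_depth_map` is a hypothetical helper, and `min_depth` is an illustrative cutoff. When several points fall on the same pixel, the nearest one is kept, which, as far as I can tell, is also how the linked monodepth script resolves duplicates:

```python
import numpy as np


def points_to_depth_map(points_cam, K, height, width, min_depth=1.0):
    """Project 3xN points (camera frame, metres) into an HxW sparse depth map.

    Pixels with no projected point are 0; if several points hit the same
    pixel, the closest depth is kept.
    """
    points_cam = np.asarray(points_cam, dtype=np.float64)
    keep = points_cam[2] > min_depth  # drop points behind or too close to the camera
    pts = points_cam[:, keep]
    z = pts[2]

    uvw = K @ pts  # homogeneous pixel coordinates
    u = np.round(uvw[0] / uvw[2]).astype(int)
    v = np.round(uvw[1] / uvw[2]).astype(int)

    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]

    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)  # unbuffered: keeps the nearest point per pixel
    depth[np.isinf(depth)] = 0.0     # 0 marks pixels without a lidar return
    return depth
```

`np.minimum.at` is used instead of plain fancy-index assignment because NumPy does not guarantee which value wins when the same index appears more than once in an assignment.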

For single lidar scans I could get a depth map, although it looks poor. But when projecting multiple lidar scans onto the image plane, the point clouds get accumulated in a single place.
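One thing worth checking is whether each sweep is first moved into a common frame using its own pose before projection. Each sweep was captured at a different time, so it has to go lidar → global using that sweep's own transform, then global → reference camera. Below is a minimal NumPy sketch under the assumption that each 4x4 `T_global_from_lidar` has already been built from that sweep's `ego_pose` and `calibrated_sensor` records, and `T_global_from_refcam` likewise from the camera image's records. `accumulate_sweeps` is a hypothetical helper. The nuScenes devkit's `LidarPointCloud.from_file_multisweep` appears to do a similar aggregation, so it may be worth reading its docstring as well:

```python
import numpy as np


def to_homogeneous(points):
    """3xN -> 4xN by appending a row of ones."""
    return np.vstack([points, np.ones((1, points.shape[1]))])


def accumulate_sweeps(sweeps, T_global_from_refcam):
    """Stack several lidar sweeps in the reference camera frame.

    sweeps: list of (points_lidar (3xN), T_global_from_lidar (4x4)), where each
            transform is that sweep's own lidar -> global pose.
    T_global_from_refcam: 4x4 reference camera -> global pose.
    Returns a 3xM array of all points in the reference camera frame.
    """
    T_refcam_from_global = np.linalg.inv(T_global_from_refcam)
    out = []
    for points, T_global_from_lidar in sweeps:
        # Compose lidar -> global -> reference camera for this sweep.
        T = T_refcam_from_global @ T_global_from_lidar
        pts_h = to_homogeneous(np.asarray(points, dtype=np.float64))
        out.append((T @ pts_h)[:3])
    return np.hstack(out)
```

The result can then go straight into the projection step, since it is already in the camera frame.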

Has anyone tried this approach before to get depth map ground truth from lidar scans on nuScenes?

Any help would be highly appreciated.

@ben0110 Have you tried monodepth2 on the nuScenes dataset for estimating depth maps from monocular images?