I have been working with LiDAR pointclouds and their associated bounding boxes. I was a bit confused about what the various reference frames are and what objects are part of them.

A bit more specifically I am confused by this block of code

‘’’

# Points live in the point sensor frame. So they need to be transformed via global to the image plane.

# First step: transform the point-cloud to the ego vehicle frame for the timestamp of the sweep.

cs_record = self.nusc.get(‘calibrated_sensor’, pointsensor[‘calibrated_sensor_token’])

pc.rotate(Quaternion(cs_record[‘rotation’]).rotation_matrix)

pc.translate(np.array(cs_record[‘translation’]))

# Second step: transform to the global frame.

poserecord = self.nusc.get('ego_pose', pointsensor['ego_pose_token'])

pc.rotate(Quaternion(poserecord['rotation']).rotation_matrix)

pc.translate(np.array(poserecord['translation']))

# Third step: transform into the ego vehicle frame for the timestamp of the image.

poserecord = self.nusc.get('ego_pose', cam['ego_pose_token'])

pc.translate(-np.array(poserecord['translation']))

pc.rotate(Quaternion(poserecord['rotation']).rotation_matrix.T)

# Fourth step: transform into the camera.

cs_record = self.nusc.get('calibrated_sensor', cam['calibrated_sensor_token'])

pc.translate(-np.array(cs_record['translation']))

pc.rotate(Quaternion(cs_record['rotation']).rotation_matrix.T)

‘’’

Here it says in the first step it takes points from the pointcloud, which are in the point sensor frame and transforms it into the ego vehicle frame for the timestamp of the sweep, and then transforms it into the global frame. However, I am confused because in a separate function that I use to figure out which points are in a box

‘’’

def points_in_box(box: ‘Box’, points: np.ndarray, wlh_factor: float = 1.0):

"""

Checks whether points are inside the box.

Picks one corner as reference (p1) and computes the vector to a target point (v).

Then for each of the 3 axes, project v onto the axis and compare the length.

Inspired by: https://math.stackexchange.com/a/1552579

:param box: <Box>.

:param points: <np.float: 3, n>.

:param wlh_factor: Inflates or deflates the box.

:return: <np.bool: n, >.

"""

corners = box.corners(wlh_factor=wlh_factor)

p1 = corners[:, 0]

p_x = corners[:, 4]

p_y = corners[:, 1]

p_z = corners[:, 3]

i = p_x - p1

j = p_y - p1

k = p_z - p1

v = points - p1.reshape((-1, 1))

iv = np.dot(i, v)

jv = np.dot(j, v)

kv = np.dot(k, v)

mask_x = np.logical_and(0 <= iv, iv <= np.dot(i, i))

mask_y = np.logical_and(0 <= jv, jv <= np.dot(j, j))

mask_z = np.logical_and(0 <= kv, kv <= np.dot(k, k))

mask = np.logical_and(np.logical_and(mask_x, mask_y), mask_z)

return mask

‘’’

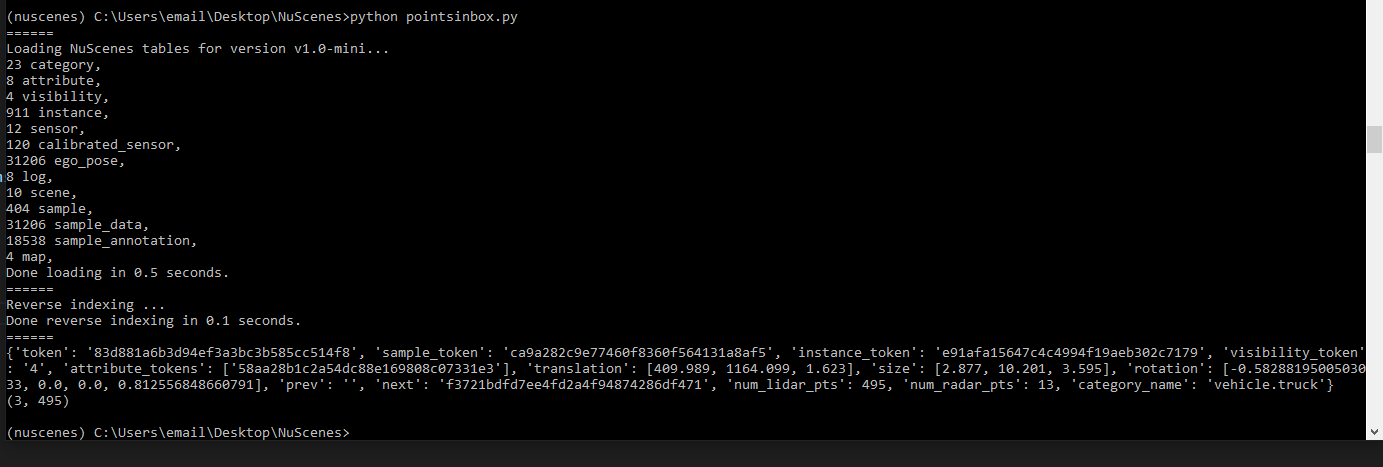

I do not do any transformations on either the pointclouds nor the box. Additionally, to find the distance between the box and the ego_vehicle, I saw in another post that I could just take the euclidean distance between the center of the box and the translation of the ego_pose as they are both in global coordinates. This confuses me because I was under the impression that the pointcloud would be in a different reference frame than the box.

As such, I was hoping to get some details on what exactly the various reference frames (point sensor frame, ego frame for timestamp of sweep, global frame) are referring to, how they are related, and which objects are in particular reference frames in context of the code blocks above.