Hi. I would like to get the yaw angle of each object with respect to the camera pose, not with respect to the map:

E.g.

yaw 0 degrees: frontal view of the object.

yaw 180 degrees: back view of the object.

How can I obtain it for each object in a scene?

Rotation wrt the camera pose

Hi.

Thanks for your interest in nuScenes.

Just use the function get_sample_data, which transforms the boxes into the coordinate frame of the sensor that belongs to the provided sample_data_token.

Now for every box you can access box.orientation which gives you the quaternion.

You can compute the yaw angle using: https://github.com/nutonomy/nuscenes-devkit/blob/master/python-sdk/nuscenes/eval/detection/utils.py#L162

Note that our annotations only have a yaw angle, not pitch or roll. Since the global coordinate frame and the sensor coordinate frames are not perfectly aligned, this may introduce a very small error.
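The steps above could be sketched roughly as follows. This is only a sketch under assumptions: `nusc` is taken to be a loaded NuScenes instance, `box_yaws` and `yaw_from_rotation_matrix` are hypothetical helper names, and the yaw formula assumes a frame whose z-axis points up (lidar or global), so the token should belong to a lidar sample_data record.

```python
import numpy as np


def yaw_from_rotation_matrix(rotation_matrix):
    """Yaw in radians for a box rotation expressed in a z-up frame (assumption)."""
    # Rotate the box's local x-axis (its heading) into the sensor frame.
    v = np.dot(rotation_matrix, np.array([1.0, 0.0, 0.0]))
    # Angle of the heading in the xy plane, measured from the x-axis.
    return float(np.arctan2(v[1], v[0]))


def box_yaws(nusc, sample_data_token):
    """Return one yaw angle per box for the given sample_data token."""
    # get_sample_data returns (data_path, boxes, camera_intrinsic).
    _, boxes, _ = nusc.get_sample_data(sample_data_token)
    return [yaw_from_rotation_matrix(box.orientation.rotation_matrix) for box in boxes]
```

Each `box.orientation` is a quaternion object, and its `rotation_matrix` attribute is what the helper consumes.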

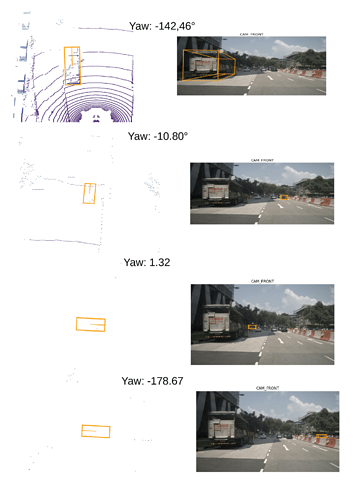

Hi! Thank you. I tried that function, but it does not seem to work. The only thing I added is the conversion from radians to degrees (a factor of 180/pi applied to the yaw angle). This image shows what I obtained. I cannot make sense of the signs of the angles. Which direction is positive and which is negative? I would like an angle of 0 to correspond to a vehicle coming toward you (from the camera's point of view).

Thanks in advance

Hi. Normally the yaw=0 orientation is to the right. So if you want to rotate it to be forward, you would need to add 90 degrees. I think in your visualization something is wrong, but I am not able to figure out what without the code.
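A small sketch of the 90 degree shift, assuming the input yaw is in radians and that the result should stay in the range [-180, 180). The helper name `shift_yaw_degrees` is hypothetical, and whether the offset should be +90 or -90 depends on the sign convention of your visualization.

```python
import math


def shift_yaw_degrees(yaw_rad, offset_deg=90.0):
    """Convert a yaw from radians to degrees, add an offset, wrap to [-180, 180)."""
    deg = math.degrees(yaw_rad) + offset_deg
    # Python's % always returns a non-negative result for a positive divisor.
    return (deg + 180.0) % 360.0 - 180.0
```

With the default offset, a yaw of 0 (pointing right) maps to 90 degrees.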

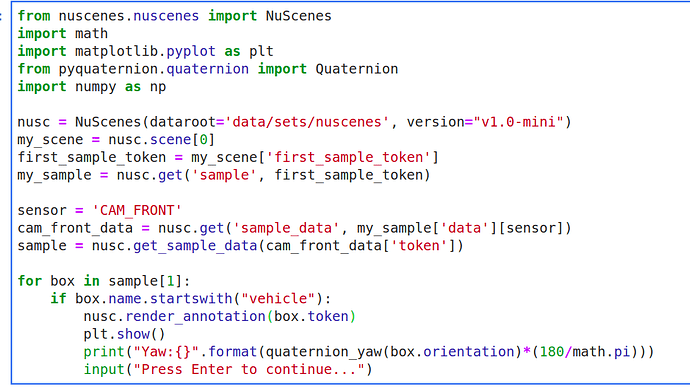

Hello, this is the code. I am not able to figure out the error. The function quaternion_yaw() is your function.

Okay. I think I know what the problem is. The comments in quaternion_yaw say:

Note that this only works for a quaternion that represents a box in lidar or global coordinate frame. It does not work for a box in the camera frame.

I suggest you just add your own method to compute yaw in the camera frame, by swapping the coordinates accordingly in:

    # Project into xy plane.
    v = np.dot(q.rotation_matrix, np.array([1, 0, 0]))

    # Measure yaw using arctan.
    yaw = np.arctan2(v[1], v[0])

Thank you very much for your reply. So is the problem in how the image is projected into the camera frame by the function get_sample_data(), or is it enough to change the code of quaternion_yaw()? Once I solve the problem, I will post the solution.

Just modify a copy of the quaternion_yaw function.

The up vector should be [0, -1, 0] instead of [1, 0, 0].

Then you need to compute the arctan between v[0] and v[2] (perhaps one of the signs is negative).

See the coordinate frames at https://www.nuscenes.org/public/images/data.png
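One possible reading of this suggestion, as a sketch only: keep the box's local x-axis [1, 0, 0] as the heading vector, treat the camera's up direction as [0, -1, 0] (x right, y down, z forward), and measure the angle in the camera's x-z plane. The function name `camera_frame_yaw` and the sign convention (0 = pointing right, positive toward the camera's forward axis) are assumptions, so flip a sign if your plot comes out mirrored.

```python
import numpy as np


def camera_frame_yaw(rotation_matrix):
    """Yaw in radians about the camera's up axis (-y), for a box in the camera frame."""
    # Rotate the box's local x-axis (its heading) into camera coordinates.
    v = np.dot(rotation_matrix, np.array([1.0, 0.0, 0.0]))
    # Measure the heading in the x-z plane: x is right, z is forward.
    return float(np.arctan2(v[2], v[0]))
```

Under this convention a box whose heading points straight at the camera (along -z) gets a yaw of -pi/2, so adding 90 degrees would make an oncoming vehicle read as 0. With a pyquaternion-style orientation this would be called as `camera_frame_yaw(box.orientation.rotation_matrix)`.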

I think there is another way to do it: rotate the object from the camera frame back to the LiDAR frame using the following matrix:

    R = [[1,  0, 0],
         [0,  0, 1],
         [0, -1, 0]]

Then you can use the quaternion_yaw() as usual.

Don’t use these custom rotation matrices. The sensors are not perfectly rotated by 90 degrees, but rather 89 degrees or so, which will cause incorrect results. Work with the corresponding transformations.
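As a rough illustration of working with the calibrated transformations instead of a hard-coded matrix: the sketch below assumes you already have 3x3 rotation matrices `ego_from_cam` and `ego_from_lidar` (hypothetical names) that map each sensor's coordinates into the ego vehicle frame, for example built from the rotation quaternions in the calibration records. The function names are also hypothetical.

```python
import numpy as np


def box_rotation_cam_to_lidar(box_rot_cam, ego_from_cam, ego_from_lidar):
    """Re-express a box rotation given in the camera frame in the lidar frame."""
    # The inverse of a rotation matrix is its transpose.
    lidar_from_cam = ego_from_lidar.T @ ego_from_cam
    return lidar_from_cam @ box_rot_cam


def yaw_z_up(rotation_matrix):
    """Yaw in radians for a rotation expressed in a z-up frame such as lidar."""
    v = rotation_matrix @ np.array([1.0, 0.0, 0.0])
    return float(np.arctan2(v[1], v[0]))
```

If the camera were mounted at exactly 90 degrees, `lidar_from_cam` would reduce to the matrix R proposed above. With the real calibration it differs slightly, which is why the calibrated rotations should be used.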